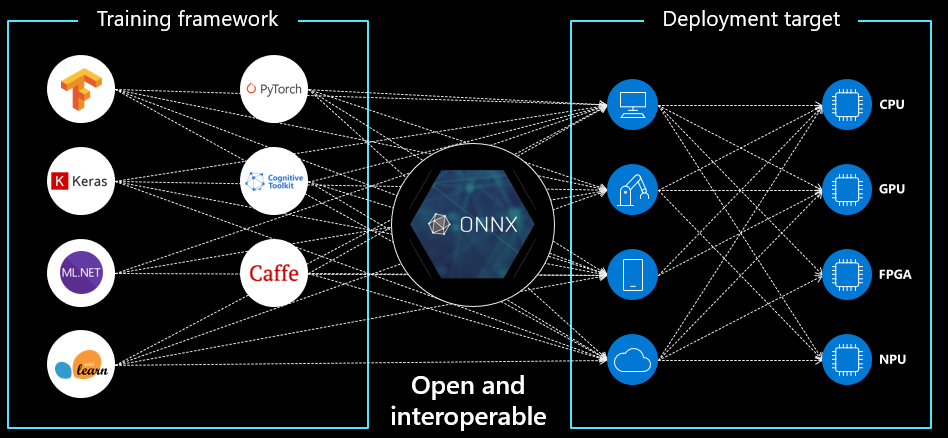

As adoption of ONNX for embedded AI accelerates across industry and the tech sector, open-source interoperability and performance optimization are changing how machine learning is deployed at the edge. Because artificial intelligence (AI) and machine learning (ML) technologies are evolving rapidly, open and flexible standards for deploying models on embedded systems have become increasingly important. ONNX (Open Neural Network Exchange) is an open standard format that lets AI models be transferred between frameworks and deployed across a wide range of platforms and environments. Developed by leading technology firms, including Microsoft and Facebook, ONNX is now widely regarded as a leading choice for embedded AI, especially when paired with ONNX Runtime, the high-performance inference engine for ONNX models.

According to a recent report by Futurum Research, the global AI software and tools market was valued at $127.1 billion in 2024 and is projected to exceed $439.9 billion by 2029, a compound annual growth rate (CAGR) of approximately 28.2%. This growth points to strong demand for AI that runs on edge devices and embedded systems, where ONNX is well positioned. In this context, adopting a standardized deployment approach such as ONNX is not only a technical advantage but also a strategic investment in long-term technological competitiveness.
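As a quick sanity check on these market figures, CAGR is (end value / start value)^(1 / years) - 1. The short Python sketch below recomputes the rate implied by the 2024 and 2029 values; the helper name `cagr` is illustrative, and the two dollar amounts are the only inputs taken from the report.

```python
# Recompute the compound annual growth rate (CAGR) implied by the
# market figures quoted above: $127.1B in 2024 -> $439.9B in 2029.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.282 == 28.2%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


rate = cagr(127.1, 439.9, 2029 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

Running it prints an implied CAGR of about 28.2%, consistent with the figure quoted in the report.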

The Future Roadmap of ONNX Embedded AI

ONNX is no longer seen merely as a model exchange format; it is rapidly becoming a standard among technology firms and electronics manufacturers alike. Adopting ONNX as the de facto interchange format for embedded AI improves system compatibility and efficiency and simplifies scaling. This matters more and more as AI systems grow more complex and computationally demanding.

In practice, Microsoft and Facebook AI Research, which co-created ONNX, use it to deploy AI models for tasks ranging from image recognition to natural language processing, including on embedded platforms. ONNX works with the major training frameworks: PyTorch exports to ONNX natively, and TensorFlow and Keras models can be converted with tools such as tf2onnx. This broad compatibility makes ONNX a strong fit for real-time, high-accuracy applications such as industrial automation, surveillance, and smart city infrastructure.

Why ONNX + ONNX Runtime Is a Technical Advantage

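Combining ONNX with ONNX Runtime improves deployment performance for AI models on edge and embedded devices. Through pluggable execution providers, ONNX Runtime can run the same model on different hardware architectures, from CPUs and GPUs to NPUs (Neural Processing Units), using each device's hardware acceleration where it is available. Because one exported model can target many devices, teams spend less development time and cost porting models to each new platform.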
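In ONNX Runtime, hardware targeting is expressed as an ordered list of execution providers passed to `onnxruntime.InferenceSession`. The sketch below assumes a hypothetical edge device that may expose a Qualcomm NPU, an Intel accelerator, or an NVIDIA GPU; it keeps only the providers present in the installed build (as reported by `onnxruntime.get_available_providers()`) and always falls back to the CPU. The preference order, the helper name `pick_providers`, and the `model.onnx` path are illustrative assumptions, not a recommended configuration.

```python
# Sketch: choose ONNX Runtime execution providers in order of preference,
# keeping only those available in the installed build and always ending
# with the CPU provider as a fallback.
from typing import List, Sequence

# Hypothetical preference order for an edge device: NPU first, then other
# accelerators, then CPU.
PREFERRED_PROVIDERS = [
    "QNNExecutionProvider",       # Qualcomm NPUs
    "OpenVINOExecutionProvider",  # Intel CPUs, GPUs, and NPUs
    "CUDAExecutionProvider",      # NVIDIA GPUs
    "CPUExecutionProvider",       # always-available fallback
]


def pick_providers(preferred: Sequence[str], available: Sequence[str]) -> List[str]:
    """Return the preferred providers that are actually available, CPU last."""
    chosen = [p for p in preferred if p in available and p != "CPUExecutionProvider"]
    chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed; skipping provider detection")
    else:
        providers = pick_providers(PREFERRED_PROVIDERS, ort.get_available_providers())
        print("Using providers:", providers)
        # "model.onnx" is a placeholder path for an exported model:
        # session = ort.InferenceSession("model.onnx", providers=providers)
```

ONNX Runtime assigns graph nodes to providers in the order given, so keeping the CPU provider last gives any operator an accelerator cannot handle a place to run.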

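ONNX Runtime accelerates machine learning models with techniques such as constant folding, graph fusion, and quantization. Constant folding precomputes parts of the graph that depend only on constant values; graph fusion merges chains of operators (for example, a convolution followed by batch normalization) into a single, cheaper operator; and quantization represents weights and activations with lower-precision integers, typically 8-bit, instead of 32-bit floating-point numbers. These optimizations reduce inference latency and resource consumption, which is critical for low-power or size-constrained edge devices. ONNX Runtime also runs on a wide range of operating systems and compute environments, giving real-world industrial and consumer applications the portability they need.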
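To make graph fusion concrete, the plain-Python sketch below folds an inference-mode batch-normalization step into the preceding fully connected layer, the same idea ONNX Runtime applies when it fuses a convolution with a following batch normalization. It is a toy illustration with hypothetical helper names, not ONNX Runtime's implementation (which rewrites the ONNX graph itself). The batch-norm formula follows the ONNX `BatchNormalization` operator, (x - mean) / sqrt(var + eps) * scale + B, with `gamma` standing for scale and `beta` for B.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Fully connected layer: y[o] = sum_i weights[o][i] * x[i] + bias[o]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def batchnorm(y: Vector, gamma: Vector, beta: Vector, mean: Vector, var: Vector,
              eps: float = 1e-5) -> Vector:
    """Inference-mode batch normalization, one channel per output feature."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def fuse_linear_batchnorm(weights: Matrix, bias: Vector, gamma: Vector, beta: Vector,
                          mean: Vector, var: Vector,
                          eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Fold batch-norm constants into the preceding layer's weights and bias.

    The per-channel factor gamma / sqrt(var + eps) is computed once, ahead of
    time (constant folding), so inference needs one layer instead of two.
    """
    fused_w: Matrix = []
    fused_b: Vector = []
    for o, row in enumerate(weights):
        scale = gamma[o] / math.sqrt(var[o] + eps)
        fused_w.append([w * scale for w in row])
        fused_b.append((bias[o] - mean[o]) * scale + beta[o])
    return fused_w, fused_b


if __name__ == "__main__":
    # Arbitrary example values for a layer with 2 inputs and 2 outputs.
    w = [[1.0, 2.0], [-0.5, 0.25]]
    b = [0.1, -0.2]
    gamma, beta = [1.5, 0.8], [0.0, 0.3]
    mean, var = [0.2, -0.1], [0.9, 0.04]
    x = [0.7, -1.3]

    two_ops = batchnorm(linear(w, b, x), gamma, beta, mean, var)
    fw, fb = fuse_linear_batchnorm(w, b, gamma, beta, mean, var)
    one_op = linear(fw, fb, x)
    print("unfused:", two_ops)
    print("fused:  ", one_op)
```

Both paths produce the same outputs up to floating-point rounding, but the fused version does one layer's worth of work per inference instead of two.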

Vietsol’s Competitive Edge in ONNX Embedded AI Solutions

With deep expertise in Edge AI and ML, Vietsol is actively leveraging ONNX and ONNX Runtime to develop powerful ONNX embedded AI solutions tailored for industrial and edge environments. Vietsol’s strength lies in its ability to customize and optimize AI models for highly specific use cases—ranging from real-time data processing in IoT devices to vision-based automation in smart factories.

A key enabler of this approach is the ONNX Model Zoo, an open repository of pre-trained models in ONNX format covering image classification, object detection, NLP, and more. By building on these open-source foundations, Vietsol accelerates R&D, reduces development overhead, and rapidly delivers embedded AI solutions compatible with the latest edge hardware.

Frequently Asked Questions (FAQ) About ONNX Embedded AI

Can ONNX replace TensorFlow or PyTorch?

No. ONNX is a unifying intermediate format, not a training framework. Models are still built and trained in frameworks such as PyTorch, TensorFlow, or scikit-learn, then exported to ONNX so they can be deployed consistently and efficiently across platforms.

Why is ONNX suitable for embedded devices?

ONNX Runtime provides features aimed at constrained environments, such as quantization and reduced-size builds. Together these enable compact models, fast inference, and low memory consumption, making ONNX well suited to IoT devices and other edge hardware, although the smallest microcontrollers may still require a more specialized runtime.

Does ONNX support real-time inference?

Yes. With graph optimizations (such as operator fusion and redundant-node elimination) and quantization, ONNX Runtime can achieve real-time or near-real-time inference on many embedded and industrial platforms, depending on the model and the hardware.
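Quantization is a large part of that speed-up: storing weights as 8-bit integers instead of 32-bit floats cuts their size roughly fourfold and lets many processors use faster integer arithmetic. The sketch below shows the linear (affine) mapping used by the ONNX `QuantizeLinear` and `DequantizeLinear` operators, with simple asymmetric min/max calibration as an assumed, simplified choice. The helper names are hypothetical; in practice, ONNX Runtime's quantization tooling (for example `onnxruntime.quantization.quantize_dynamic`) rewrites the whole model rather than quantizing tensors by hand.

```python
from typing import List, Tuple

QMIN, QMAX = 0, 255  # uint8 range used by this sketch


def compute_qparams(values: List[float]) -> Tuple[float, int]:
    """Asymmetric (min/max) uint8 quantization parameters for a tensor."""
    lo = min(min(values), 0.0)  # widen the range to include 0.0 so that
    hi = max(max(values), 0.0)  # zero is exactly representable
    if hi == lo:  # all-zero tensor: any positive scale works
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = round(QMIN - lo / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """QuantizeLinear-style mapping: saturate(round(x / scale) + zero_point).

    Python's round() rounds halves to even, the same tie-breaking rule
    that the ONNX QuantizeLinear operator specifies.
    """
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """DequantizeLinear-style mapping: (q - zero_point) * scale."""
    return [(qi - zero_point) * scale for qi in q]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
    scale, zp = compute_qparams(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print("scale:", scale, "zero_point:", zp)
    print("uint8 values:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each in-range value comes back within half a quantization step (scale / 2) of the original, and 0.0 maps back to exactly 0.0, which is why the calibration range is widened to include zero.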

Is ONNX open source?

Yes. ONNX is an open-source project co-developed by Microsoft, Facebook (now Meta), and other contributors. Its source code and documentation are freely available on GitHub and are continuously maintained by a global developer community.

Conclusion

ONNX and ONNX Runtime are emerging as foundational standards for deploying scalable, high-performance AI models on embedded systems. As this ONNX embedded AI paradigm gains traction, companies like Vietsol are seizing the opportunity to lead in industrial AI by offering tailored, efficient, and future-ready embedded solutions.

Investing in Edge AI/ML and open standards like ONNX not only secures Vietsol's competitive edge but also lays the groundwork for long-term, sustainable growth in manufacturing, mobility, and digital infrastructure.

Vietsol – Pioneering Embedded Intelligence for the Next Industrial Era.
